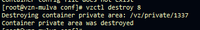

I'm in the middle of retiring a HWN right now, and I migrated about 20 containers from QA over to the replacement; one was a copy of a personal CT. All went fine until I went to remove a low-numbered container and vzctl removed one with a larger number instead. It happened to be the copy of my CT that got erased. That one, coincidentally, is an older Ploop device. The CTID is not a coincidence.

[root@vzn-mulva conf]# ls -l /vz/root/1337

ls: cannot access /vz/root/1337: No such file or directory

I don't know whether it's Ploop, vzctl or something drastically wrong with their newest kernel, so I'm blowing it away and starting fresh. Too risky. Just a heads-up for now. Keep an eye on vzctl.log and, FTLOG, if you don't have at least vzdump set up, get crackin'.
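For anyone who wants a quick way to keep an eye on vzctl.log, here's a rough sketch, not a tested tool. The function name `destroyed_ctids` is made up, the default path `/var/log/vzctl.log` is an assumption, and it assumes log lines look like `<timestamp> vzctl : CT <id> : <message>`, which may not match your vzctl version. It just lists the CTIDs that show up on lines mentioning "destroy", so you can compare them against what you actually meant to remove.

```shell
# Hypothetical helper: list CTIDs that a vzctl log mentions on "destroy" lines.
# Assumption: lines look like "<timestamp> vzctl : CT <id> : <message>".
# Assumption: the log lives at /var/log/vzctl.log unless a path is given.
destroyed_ctids() {
    log="${1:-/var/log/vzctl.log}"
    # Keep only lines mentioning destroy, pull out the numeric CTID,
    # then print each CTID once in numeric order.
    grep -i 'destroy' "$log" \
        | sed -n 's/.* CT \([0-9][0-9]*\) : .*/\1/p' \
        | sort -un
}
```

Run `destroyed_ctids` with no arguments after a cleanup, or pass another log path as the first argument. If a CTID turns up that you never asked to remove, stop and check your backups.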

Peace.

