wlanboy

Content Contributor

After some thinking and coding, I am now building my own geo database service.

The goal, if reachable, is a self-made geolocation-based service that redirects website requests to the closest server.

I want to talk about my first steps and hope to get some input on a few open items.

The first step is to create a list of countries and (US) states with their geo locations (using midpoints).

I have finished that step, but I hope someone can point me to an open-source list of geo locations for all cities.

The workflow for the application is:

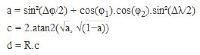

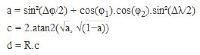

- Use GeoLite2 from MaxMind to get the latitude and longitude of the IP (alternatives?)

- Search for the closest country (later city) to the geo point returned by MaxMind
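The lookup step could be sketched roughly like this in Python, assuming the third-party `geoip2` package and a downloaded GeoLite2 City database. The file name `GeoLite2-City.mmdb` and the helper names `open_reader` and `lookup_coordinates` are my own assumptions for illustration, not part of the project described here.

```python
def open_reader(db_path="GeoLite2-City.mmdb"):
    """Open a GeoLite2 City database; the default path is an assumption."""
    # Imported lazily so the rest of the sketch works without the package.
    import geoip2.database  # third-party: pip install geoip2
    return geoip2.database.Reader(db_path)


def lookup_coordinates(reader, ip):
    """Return (latitude, longitude) for an IP, or None if no coordinates exist.

    reader.city() raises geoip2.errors.AddressNotFoundError when the
    address is not in the database; that is left to the caller here.
    """
    response = reader.city(ip)
    lat = response.location.latitude
    lon = response.location.longitude
    if lat is None or lon is None:
        return None
    return lat, lon


# Possible usage (needs the database file on disk):
#   reader = open_reader()
#   print(lookup_coordinates(reader, "203.0.113.7"))
#   reader.close()
```

Passing the reader in as a parameter keeps the lookup easy to swap for an alternative data source later.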

Distance calculation can be done with the haversine formula:
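For reference, this is the haversine formula in its usual textbook form for two points $(\varphi_1, \lambda_1)$ and $(\varphi_2, \lambda_2)$, with angles in radians, $\Delta\varphi = \varphi_2 - \varphi_1$ and $\Delta\lambda = \lambda_2 - \lambda_1$. The subscripts and the intermediate value $a$ are notation I am assuming here.

$$
a = \sin^2\left(\frac{\Delta\varphi}{2}\right) + \cos\varphi_1 \cos\varphi_2 \sin^2\left(\frac{\Delta\lambda}{2}\right), \qquad d = 2R \arcsin\left(\sqrt{a}\right)
$$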

Parameters are:

- φ latitude

- λ longitude

- R radius of earth
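A minimal Python sketch of the distance calculation with these parameters could look like this. The function name `haversine_km` and the mean earth radius of 6371 km are assumptions for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0  # R: mean radius of the earth (assumed value)


def haversine_km(lat1, lon1, lat2, lon2, radius=EARTH_RADIUS_KM):
    """Great-circle distance between two points given in decimal degrees."""
    phi1 = math.radians(lat1)
    phi2 = math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    # Clamp to 1.0 so rounding errors near antipodal points cannot
    # push the argument of asin out of its domain.
    return 2 * radius * math.asin(math.sqrt(min(1.0, a)))
```

Inputs are in degrees because that is how geo databases usually deliver coordinates; the conversion to radians happens inside the function.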

This is a perfect match for the map/reduce functionality of MongoDB.
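To illustrate why the search fits the map/reduce pattern, here is a sketch in plain Python, not the MongoDB API: the map step computes one distance per stored location, and the reduce step keeps the smallest. The midpoints are rough illustrative values and the names `MIDPOINTS` and `nearest` are hypothetical.

```python
import math
from functools import reduce


def haversine_km(lat1, lon1, lat2, lon2, radius=6371.0):
    """Great-circle distance in km between two points in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * radius * math.asin(math.sqrt(min(1.0, a)))


# Rough, illustrative midpoints as (latitude, longitude); not the real list.
MIDPOINTS = {
    "Germany": (51.0, 9.0),
    "France": (46.0, 2.0),
    "Spain": (40.0, -4.0),
}


def nearest(point, midpoints):
    """Return (distance_km, name) of the stored location closest to point."""
    lat, lon = point
    # map: emit one (distance, name) pair per stored location
    mapped = (
        (haversine_km(lat, lon, m_lat, m_lon), name)
        for name, (m_lat, m_lon) in midpoints.items()
    )
    # reduce: keep whichever pair has the smaller distance
    # (raises TypeError if midpoints is empty)
    return reduce(lambda best, cand: cand if cand[0] < best[0] else best, mapped)


distance, name = nearest((48.86, 2.35), MIDPOINTS)  # a point in Paris
print(name)
```

The same function works unchanged for a handful of server locations instead of country midpoints.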

MaxMind sometimes has a fairly high miss rate for geo locations, for example IPs in Detroit that are placed in Kansas. That may be because I am using the free edition.

To give a first look at my project, I have created a form where you can enter one IP and three server locations; the closest server location is returned.