This is the first of what I hope will be a series of posts on Zabbix. For those not in the know, Zabbix is free, open source monitoring software with all the features of an enterprise solution. Take all of this advice with a grain of salt: it is based on our experience over the past year, and your mileage may vary.

When should I consider the scalability of my monitoring system?

Ideally you should plan for the future from the start, but since this rarely happens, you should begin planning no later than 100-200 values per second. Performance depends on many factors, including how many of your checks run on proxies (or agents) versus how many are simple checks. We use many simple checks, so we hit our first issues at 100 values per second.
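
If you are not sure where you currently sit, a rough figure can be pulled straight from the database. The query below is only a sketch: it assumes the Zabbix 2.x schema, where `items.delay` is the update interval in seconds and a `status` of 0 means enabled on both `items` and `hosts`. It ignores flexible intervals and trapper items, so treat the result as a ballpark.

```sql
-- Rough estimate of new values per second: each enabled item with a fixed
-- interval contributes 1/delay values per second.
-- Assumes Zabbix 2.x column names and status codes (0 = enabled/monitored).
SELECT ROUND(SUM(1 / i.delay), 2) AS approx_values_per_second
FROM items i
JOIN hosts h ON h.hostid = i.hostid
WHERE i.status = 0      -- item enabled
  AND h.status = 0      -- host monitored (skips templates and disabled hosts)
  AND i.delay > 0;      -- skip items with no polling interval
```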

What hardware should I be looking at?

The best thing you can do for this software is to ensure its database is stored on an SSD. This alone will increase your performance more than you would believe. We use a 60GB plan from DigitalOcean and have been very impressed with the IOPS.

At around 150-200 values per second you should hit the point where the housekeeper cannot delete records fast enough while competing with inserts for the same mutexes (lock contention). At this point you will need to introduce partitioning on your history tables. You can either partition and keep the existing housekeeper, or write your own housekeeper that works by dropping partitions. If you have items with varying history storage periods, you will most likely need to choose the first solution.
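
For the second option, the idea is roughly as follows. This is a sketch, not what we run: it assumes daily `RANGE` partitions on `clock` instead of the `HASH` layout we use, and the partition names and dates are made up for illustration.

```sql
-- One partition per day; boundaries are Unix timestamps at midnight UTC.
ALTER TABLE history_uint
PARTITION BY RANGE (clock) (
    PARTITION p20130801 VALUES LESS THAN (1375401600),  -- rows before 2013-08-02
    PARTITION p20130802 VALUES LESS THAN (1375488000),  -- rows before 2013-08-03
    PARTITION p20130803 VALUES LESS THAN (1375574400)   -- rows before 2013-08-04
);

-- The custom "housekeeper", run daily from cron or similar.
-- Add the next day's partition ahead of time: inserts fail if no partition
-- covers the row's clock value.
ALTER TABLE history_uint
    ADD PARTITION (PARTITION p20130804 VALUES LESS THAN (1375660800));

-- Drop the oldest day in one statement instead of deleting it row by row.
ALTER TABLE history_uint DROP PARTITION p20130801;
```

A dropped partition takes every row for that day with it, so this only works when all items in the table share the same history period. That is why mixed storage periods push you toward the first option.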

An example of what we use is below:

    CREATE TABLE `history_uint` (
      `itemid` bigint(20) unsigned NOT NULL,
      `clock` int(11) NOT NULL DEFAULT '0',
      `value` bigint(20) unsigned NOT NULL DEFAULT '0',
      `ns` int(11) NOT NULL DEFAULT '0',
      KEY `history_uint_1` (`itemid`,`clock`)
    ) ENGINE=InnoDB DEFAULT CHARSET=latin1
    /*!50100 PARTITION BY HASH (clock DIV 86400)
    PARTITIONS 40 */;

We chose 40 partitions because most of our data is stored for 30-40 days. This ensures data is always being inserted far away from where the housekeeping process is purging rows. You should partition all the tables you use extensively, including trends and events as applicable.
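
To see why this keeps inserts and purges apart: for `PARTITION BY HASH (expr) PARTITIONS n`, MySQL places a row in partition `MOD(expr, n)`. The query below is just that arithmetic run against the current time. The 30 and 40 day offsets are example retention periods, not values taken from our configuration.

```sql
-- Partition number = (day number since the epoch) MOD 40.
SELECT
    (UNIX_TIMESTAMP() DIV 86400) MOD 40                AS insert_partition,
    ((UNIX_TIMESTAMP() - 30 * 86400) DIV 86400) MOD 40 AS purge_partition_30d,
    ((UNIX_TIMESTAMP() - 40 * 86400) DIV 86400) MOD 40 AS purge_partition_40d;
```

With 30 days of history, the rows being purged sit in a different partition from the one being written. Note that the 40 day column always equals `insert_partition`, so a retention period right at 40 days wraps around onto the partition receiving inserts.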

Do not create too many partitions, though. InnoDB does not reclaim unused tablespace, so (assuming that, like any sane person, you are using file per table) excess partitions result in wasted disk space.
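
Both points are easy to check. A sketch, assuming the Zabbix database is named `zabbix` (adjust to your own schema name):

```sql
-- ON means each table (and each partition) gets its own .ibd file.
SHOW VARIABLES LIKE 'innodb_file_per_table';

-- Approximate row count and size per partition. TABLE_ROWS is only an
-- estimate for InnoDB, but it is enough to spot partitions that hold
-- space without holding much data.
SELECT PARTITION_NAME,
       TABLE_ROWS,
       ROUND((DATA_LENGTH + INDEX_LENGTH) / 1024 / 1024) AS size_mb
FROM information_schema.PARTITIONS
WHERE TABLE_SCHEMA = 'zabbix'
  AND TABLE_NAME = 'history_uint'
ORDER BY PARTITION_ORDINAL_POSITION;
```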

Be aware that adding partitioning will take many hours on multi-GB tables. Factor this into your plan if applicable.
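
For an existing table, the conversion itself is a single statement. This is a sketch that assumes the same layout as our `history_uint` example above. The statement rebuilds the whole table, which is where the hours go.

```sql
-- Repartition an existing table in place. Every row is copied into the
-- new partitions, so expect this to run for a long time on large tables.
ALTER TABLE history_uint
    PARTITION BY HASH (clock DIV 86400)
    PARTITIONS 40;
```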

What software should I be looking at?

If possible, check out the Zabbix 2.1 (or soon 2.2) branch. It's currently in beta, but the performance improvements are exceptional. In our experience 2.1 (Beta 2) is bug free, or at least the features we are using are.

For maximum performance we run Percona MySQL 5.5.

If you use the API heavily, make sure you have an opcode cache such as APC set up.

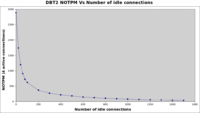

So how far can this scale?

Who knows? This new setup has us sitting at a load average below 1.0. The old setup (i7, 4GB RAM, 2x500GB RAID 1 spinning rust bucket) was over 15 and well and truly overloaded.

Like this tutorial? Be sure to let me know. Any requests?
